Like FreeBSD Jails and Solaris Zones, Linux containers are self-contained execution environments—with their own, isolated CPU, memory, block I/O, and network resources—that share the kernel of the host operating system. The result is something that feels like a virtual machine, but sheds all the weight and startup overhead of a guest operating system.

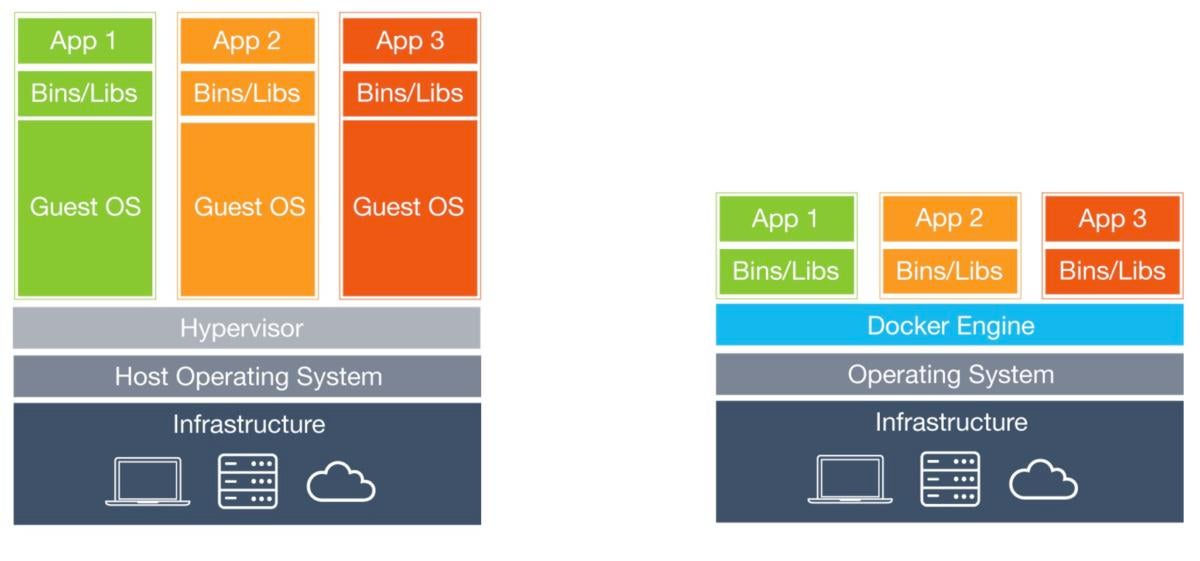

In a large-scale system, running VMs means you are probably running many duplicate instances of the same OS and many redundant boot volumes. Because containers are more streamlined and lightweight than VMs, you may be able to run six to eight times as many containers as VMs on the same hardware.

In an application environment that has web-scale requirements, containers are an appealing proposition compared to traditional server virtualization.

To understand containers, we have to start with Linux cgroups and namespaces, the Linux kernel features that create the walls between containers and other processes running on the host. Linux namespaces, originally developed by IBM, wrap a set of system resources and present them to a process to make it look like they are dedicated to that process.
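To make the namespace idea concrete, here is a minimal Python sketch that lists the namespaces the current process belongs to. It assumes a Linux host, where each entry under `/proc/self/ns` is a symbolic link whose target looks like `pid:[4026531836]`; the helper names `parse_ns_link` and `current_namespaces` are illustrative, not part of any library. Two processes that report the same number for an entry share that namespace, and a containerized process would typically report different numbers from the host's.

```python
import os
import re

NS_DIR = "/proc/self/ns"  # Linux-only; each entry is a symlink such as "pid:[4026531836]"


def parse_ns_link(target):
    """Split a namespace link target like 'pid:[4026531836]' into (type, inode)."""
    match = re.fullmatch(r"(\w+):\[(\d+)\]", target)
    if match is None:
        raise ValueError(f"unexpected namespace link: {target!r}")
    return match.group(1), int(match.group(2))


def current_namespaces(ns_dir=NS_DIR):
    """Return {entry name: namespace inode} for this process, or {} if ns_dir is missing."""
    if not os.path.isdir(ns_dir):
        return {}
    result = {}
    for name in sorted(os.listdir(ns_dir)):
        try:
            _, inode = parse_ns_link(os.readlink(os.path.join(ns_dir, name)))
        except (OSError, ValueError):
            continue  # skip entries that cannot be read or parsed
        result[name] = inode
    return result


if __name__ == "__main__":
    for name, inode in current_namespaces().items():
        print(f"{name:20} {inode}")
```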

Linux cgroups, originally developed by Google, govern the isolation and usage of system resources, such as CPU and memory, for a group of processes. For example, if you have an application that takes up a lot of CPU cycles and memory, such as a scientific computing application, you can put the application in a cgroup to limit its CPU and memory usage.
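As a rough illustration of that example, the sketch below translates "half a CPU and 256 MiB of memory" into the values the cgroup v2 interface expects. It assumes a host using cgroup v2, where `cpu.max` holds a quota and a period in microseconds, `memory.max` holds a byte count, and writing a PID to `cgroup.procs` moves a process into the group. The function names and the `cgroup_dir` parameter are hypothetical, and on a real host the write step needs root privileges (or a delegated cgroup) and an already-created cgroup directory.

```python
import os

CPU_PERIOD_US = 100_000  # scheduling period in microseconds (the kernel's default)


def cgroup_v2_limits(cpu_fraction, memory_mib):
    """Translate human-friendly limits into cgroup v2 control-file contents."""
    if cpu_fraction <= 0 or memory_mib <= 0:
        raise ValueError("limits must be positive")
    quota_us = round(cpu_fraction * CPU_PERIOD_US)
    return {
        "cpu.max": f"{quota_us} {CPU_PERIOD_US}",          # "<quota> <period>"
        "memory.max": str(int(memory_mib * 1024 * 1024)),  # bytes
    }


def apply_limits(cgroup_dir, limits, pid=None):
    """Write each limit into cgroup_dir; optionally move pid into the group.

    cgroup_dir is assumed to be an existing cgroup v2 directory that the
    caller is allowed to write to.
    """
    for filename, value in limits.items():
        with open(os.path.join(cgroup_dir, filename), "w") as f:
            f.write(value)
    if pid is not None:
        with open(os.path.join(cgroup_dir, "cgroup.procs"), "w") as f:
            f.write(str(pid))
```

Calling `cgroup_v2_limits(0.5, 256)` yields a `cpu.max` value of `50000 100000` (50 ms of CPU time in every 100 ms period) and a `memory.max` value of `268435456`.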

In short, namespaces determine what a process can see, while cgroups limit how much of each resource a group of processes can use.

From LXC to Docker

The original Linux container technology is Linux Containers, commonly known as LXC. LXC is an operating-system-level virtualization method for running multiple isolated Linux systems on a single host. Namespaces and cgroups make LXC possible.

Containers decouple applications from operating systems, which means that users can have a clean and minimal Linux operating system and run everything else in one or more isolated containers.

Also, because the operating system is abstracted away from containers, you can move a container across any Linux server that supports the container runtime environment.

Docker, which started as a project to build single-application LXC containers, introduced several significant changes to LXC that make containers more portable and flexible to use. Using Docker containers, you can deploy, replicate, move, and back up a workload even more quickly and easily than you can with virtual machines. Basically, Docker brings cloudlike flexibility to any infrastructure capable of running containers.

Thus, Docker is often credited with the surging popularity of modern-day containers. We’ll look at Docker in some detail and discuss how Docker is different from LXC in the section below.

Dipping into Docker

Although Docker started out as an open source project to build specialized LXC containers, it later morphed into its own container runtime environment. At a high level, Docker is a Linux utility that can efficiently create, ship, and run containers.

Fundamentally, both Docker and LXC containers are lightweight user-space virtualization mechanisms that rely on the kernel's cgroups and namespaces to manage resource isolation. There are, however, a number of key differences between Docker containers and LXC. In particular:

Single vs. multiprocess. Docker is designed around running a single process per container. If your application environment consists of X concurrent processes, Docker wants you to run X containers, each with a distinct process. By contrast, LXC containers have a conventional init process and can run multiple processes.

To run a simple multi-tier web application in Docker, you would need a PHP container, an Nginx container (the web server), a MySQL container (for the database process), and a few data containers for storing the database tables and other application data.

The advantages of single-process containers are many, including easy and more granular updates. Why shut down the database process when all you wanted to update is the web server? Also, single-process containers represent an efficient architecture for building microservices-based applications.

There are also limitations to single-process containers. For instance, you can’t easily run agents, logging scripts, or an SSH daemon alongside the main process inside the container. Also, it’s not easy to commit small, application-level changes to a single-process container. You are essentially forced to start a new, updated container.

Stateless vs. stateful. Docker containers are designed to be stateless, more so than LXC. First, a Docker container's own filesystem is not persistent storage. Docker gets around this by allowing you to mount host storage as a “Docker volume” into your containers. Because the volumes are mounted, they are not really part of the container environment.

Second, Docker container images consist of read-only layers. This means that, once the container image has been created, it does not change. During runtime, if the process in a container makes changes to its internal state, a “diff” is made between the internal state and the image from which the container was created. If you run the docker commit command, the diff becomes part of a new image (not the original image), from which you can create new containers. Otherwise, if you delete the container, the diff disappears.
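The layering behavior described above can be sketched with a toy model in Python. This is not how Docker is implemented; it only mimics the idea of read-only layers plus a per-container diff, using the standard library's `ChainMap` to look up a file in the newest layer first. The `Image` and `Container` classes and their methods are hypothetical names made up for this illustration, and file deletion is not modeled.

```python
from collections import ChainMap


class Image:
    """An immutable stack of layers; each layer maps a file path to its content."""

    def __init__(self, layers=()):
        self.layers = tuple(dict(layer) for layer in layers)  # oldest layer first

    def view(self):
        # ChainMap searches its first mapping first, so pass the newest layer first.
        return ChainMap(*reversed(self.layers))


class Container:
    """A running instance: one private writable diff on top of an image."""

    def __init__(self, image):
        self.image = image
        self.diff = {}  # changes made at runtime

    def write(self, path, content):
        self.diff[path] = content  # the image's layers are never modified

    def read(self, path):
        # The diff shadows the image; unchanged files fall through to the layers.
        return ChainMap(self.diff, self.image.view())[path]

    def commit(self):
        # Analogous to `docker commit`: the diff becomes the top layer of a NEW image.
        return Image(self.image.layers + (dict(self.diff),))
```

In this model, a container created from the original image never sees another container's writes, and discarding a `Container` object without calling `commit()` discards its diff, while the original `Image` stays usable for creating fresh containers.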

A stateless container is an interesting entity. You can make updates to a container, but a series of updates will engender a series of new container images, so system rollbacks are easy.

Portability. This is perhaps the single most important advance of Docker over LXC. Docker abstracts away more networking, storage, and OS details from the application than LXC does. With Docker, the application is truly independent from the configurations of these low-level resources. When you move a Docker container from one Docker host to another Docker-enabled machine, Docker guarantees that the environment for the application will remain the same.

A direct benefit of this approach is that Docker enables developers to set up local development environments that are exactly like a production server. When developers finish writing and testing their code, they can wrap it in a container and publish it directly to an AWS server or to a private cloud, and it will instantly work because the environment is the same.

Even with LXC, a developer can get something running on a local machine, only to discover that it doesn’t run properly once deployed to the server. Because the server environment is different, the developer has to spend an enormous amount of time tracking down the difference and fixing the issue.

Docker took away that complexity. This is what makes Docker containers so portable and easy to use across different cloud and virtualization environments.

A developer-friendly architecture

Decoupling applications from the underlying hardware is the fundamental concept behind virtualization. Containers go a step further and decouple applications from the underlying OS. This gives developers a level of efficiency, portability, and deployment flexibility beyond what virtualization alone provides.

The popularity of containers underscores the fact that this is the developer-driven era. If cloud was about infrastructure innovation and mobile about usability innovation, the container is the much-needed force multiplier for developers.

Chenxi Wang is an experienced technology/strategy executive with a deep technical background (Ph.D. Computer Science). Formerly the chief strategy officer for container security firm Twistlock, she now works as a startup advisor and angel investor.